With AX2012 you can set up the SharePoint sites known as "Enterprise Portal". These sites run perfectly well on the free SharePoint Foundation version, including SharePoint Foundation 2013 if you have the necessary updates. In this post I will discuss some considerations for supporting multiple environments, which you might need if you want development and testing environments in addition to production.

Installing and configuring SharePoint is in many respects a separate skill set, so I totally get why IT Pros prefer to leave that to dedicated SharePoint consultants. Having said that, if you just need to set up Enterprise Portal to support Role Centers and give your AX2012 install a nice-looking Home page, then your SharePoint install doesn't have to be too complicated.

I will use Foundation as the example, but SharePoint Server 2013 (Standard or Enterprise) obviously works with AX2012 as well. Foundation is free, but it has the features needed to support Dynamics AX Enterprise Portal with its role centers. If you plan to use Power BI, OData or any of the more advanced features, you should know that upgrading Foundation to Standard or Enterprise is not supported.

Assuming you're starting with a blank server, you can download SharePoint Foundation 2013 with SP1 and begin installing the prerequisites. If any of the prerequisites fails to install, you can browse through the log file, look for the download URL and try installing the failed component manually. I've had to install prerequisites manually before. Eventually, you should have the necessary prerequisites installed and be ready to install the SharePoint binaries.

I recommend you do not run the Configuration Wizard just yet. Rather continue with installing the updates for SharePoint. Head over to the overview of updates and download the most recent cumulative update and install it. With the latest updates installed, you are ready to initialize your SharePoint Foundation 2013 Farm.

A couple of points here:

- Consider the account you are using to install the SharePoint farm. Typically this account is referred to as the SharePoint Setup and Farm account, and you use it again to configure the farm and potentially install more SharePoint servers into the same Farm. You may need to share the credentials of this account with other IT Pros, so avoid using your own personal account.

- Normally you want a dedicated service account for SharePoint. This is an unattended account that has broad permissions on SharePoint. The administration web application will run under this account.

- It is also normal to have dedicated service accounts for several of the various services you can set up in SharePoint, but that is out of scope for this article.

Before installing the very first Enterprise Portal, I recommend the following:

- Install AX2012 Client and Management Utilities. Point the configuration to the environment for which you want to install the first SharePoint site. Both the local configuration and the business connector configuration should point to the right environment and have working WCF configurations.

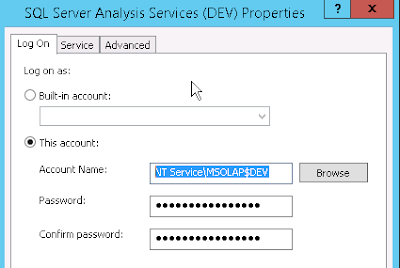

- From the SharePoint Administration Site, you need to manually create a new "Managed Account". From the Home page, under Security and General Security, you will find "Configure managed accounts", and from there you can register the business connector account as a new managed account. The SharePoint sites running Enterprise Portal need to run under this managed account.

- From the SharePoint Administration Site you also need to start the Claims to Windows Token Service (aka C2WTS). From Home, under System Settings and Servers you will find "Manage Services on Server". Locate the C2WTS and start it from here. If you start this from Services under Windows Administrative Tools (Control Panel) and not from SharePoint itself, the service will be in a faulty state and you'll get in trouble when installing Enterprise Portal. Trust me, I've been there.

- Make sure the Dynamics AX local configuration and business connector configuration point to the right AOS, and do not forget to refresh the WCF configuration and save it to this configuration.

- Create a new Web Application. Each Portal needs to run on its own Web Application with an isolated application pool. Give both the site and the application pool a good name, and give the Content Database a matching name. The managed account must be the one you created earlier using the business connector account. I like to put these sites on ports like 81, 82, 83, etc.

- When the application is created, you are ready to install Enterprise Portal using AX2012 Setup. On the step where you select the Web Application, choose the one you created in the previous step. Give the site a good name, like "DynamicsAXDev" or something else that makes it easy to understand which environment this site supports. That way, one look at the address in the browser tells you which environment you're currently in.

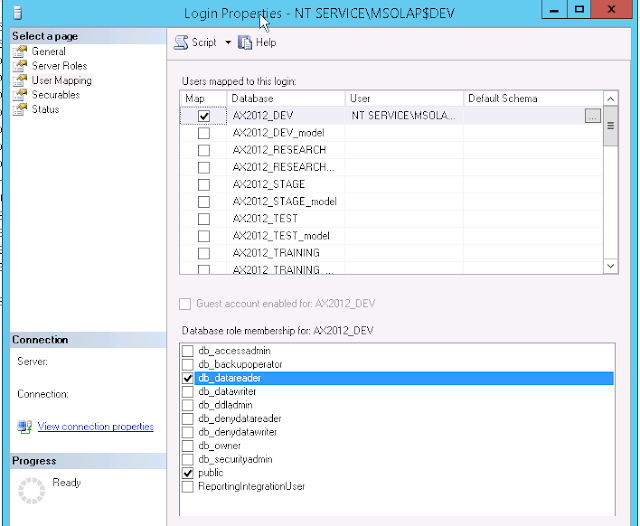

- Assuming the installation in the previous step went through successfully, your next step is to make sure the new site always connects to the right environment. Copy a working AX Configuration file (AXC file) locally to the server. Make sure it has an updated and correct WCF configuration in it. I tend to put the file under c:\inetpub\wwwroot\. Give it a good name (like DEV.axc). I know the official documentation says the file can be on a UNC share, but I never got that to work, whereas a local file works fine. Finally, you need to put a reference to this file inside the web.config for this particular Web Application. The file is normally located under C:\inetpub\wwwroot\wss\VirtualDirectories\81 (given this application runs on port 81). Open the file in Notepad or another text editor and put in a new XML section:

![]()

Now you can be sure the Web Application points to the right environment independently of whatever is changed in the business connector configuration settings on this server. I put the section after the System.Web section.

- Finally, I recommend loading the site itself and editing the Site Permissions. You probably want to make sure either Domain Users or some dedicated AD User Group has at least Read permissions.
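The web.config edit from the AXC step above can also be scripted, which helps when you repeat it for several environments. The following is only a sketch. The function name `Add-AxSessionSection` is made up for illustration, and the element names `Microsoft.Dynamics` and `Session Configuration` are my recollection of what Microsoft's Enterprise Portal documentation describes, so verify them against your own installation before relying on this.

```powershell
function Add-AxSessionSection {
    param(
        [Parameter(Mandatory = $true)] [string] $WebConfigPath,
        [Parameter(Mandatory = $true)] [string] $AxcPath
    )

    # XmlDocument.Load needs a full path, and a backup is cheap insurance.
    $fullPath = (Resolve-Path $WebConfigPath).Path
    Copy-Item $fullPath "$fullPath.bak" -Force

    $xml = New-Object System.Xml.XmlDocument
    $xml.PreserveWhitespace = $true
    $xml.Load($fullPath)
    $root = $xml.DocumentElement

    # Assumed section name; reuse it if it is already there.
    $section = $root.SelectSingleNode('Microsoft.Dynamics')
    if ($null -eq $section) {
        $section = $xml.CreateElement('Microsoft.Dynamics')
        $systemWeb = $root.SelectSingleNode('system.web')
        if ($null -ne $systemWeb) {
            # Place the section right after <system.web>, as described above.
            [void]$root.InsertAfter($section, $systemWeb)
        } else {
            [void]$root.AppendChild($section)
        }
    }

    $session = $section.SelectSingleNode('Session')
    if ($null -eq $session) {
        $session = $xml.CreateElement('Session')
        [void]$section.AppendChild($session)
    }

    # Point this Web Application at its own AXC file.
    $session.SetAttribute('Configuration', $AxcPath)
    $xml.Save($fullPath)
}
```

With the paths used in this post, the call would be `Add-AxSessionSection -WebConfigPath 'C:\inetpub\wwwroot\wss\VirtualDirectories\81\web.config' -AxcPath 'C:\inetpub\wwwroot\DEV.axc'`. Running it twice just overwrites the same attribute, so it is safe to rerun.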

You can repeat the last five steps (from verifying the configuration to setting the site permissions) for each environment you want to support. How cool is that?
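Since the SharePoint side of this is repeated per environment, it can be worth scripting. Below is a rough sketch using the standard SharePoint 2013 cmdlets, run from the SharePoint Management Shell as the farm setup account. The account name, environment name, port and database name are placeholders I made up, and I am assuming default Windows claims authentication for the Web Application.

```powershell
Add-PSSnapin Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue

# Hypothetical values: replace with your own account, environment name and port.
$bcAccount = 'CONTOSO\svc-axbc'
$envName   = 'DynamicsAXDev'
$port      = 81

# Register the business connector account as a managed account (once per farm).
if (-not (Get-SPManagedAccount | Where-Object { $_.Username -eq $bcAccount })) {
    New-SPManagedAccount -Credential (Get-Credential $bcAccount) | Out-Null
}

# Start C2WTS through SharePoint, not through Windows Services.
Get-SPServiceInstance -Server $env:COMPUTERNAME |
    Where-Object { $_.TypeName -eq 'Claims to Windows Token Service' -and $_.Status -ne 'Online' } |
    Start-SPServiceInstance | Out-Null

# One Web Application, application pool and content database per environment.
New-SPWebApplication -Name "EP $envName" -Port $port `
    -ApplicationPool "EP_$envName" `
    -ApplicationPoolAccount (Get-SPManagedAccount $bcAccount) `
    -DatabaseName "WSS_Content_EP_$envName" `
    -AuthenticationProvider (New-SPAuthenticationProvider)
```

Installing Enterprise Portal itself still goes through AX2012 Setup as described above; this only prepares the Web Application that Setup will ask for.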

Now, what if you need to copy the AX2012 data between the environments? Well, that can be a problem, because when you install Enterprise Portal, setup connects to the AOS and adds data to the database. These data include the URL and the unique ID of the site you installed. If you start copying data around, you might end up with multiple environments pointing at the same Enterprise Portal, and this Portal points to just one of the AOSes, and that isn't very helpful.

We need to fix that! :-)

Use this PowerShell command to identify the unique ID (GUID) for each site:

```powershell
Get-SPSite http://fancysharepointserver:81/sites/dynamicsaxtest |
    Select -ExpandProperty AllWebs |
    where {$_.Url -notmatch "dynamicsaxtest/"} | ft -a ID, Url
```

This will reveal the ID, and you can copy it over to the following SQL command:

```sql
-- Site ID and URL of the Enterprise Portal site that belongs to this environment.
DECLARE
    @EPURL  AS VARCHAR(255),
    @EPGUID AS VARCHAR(255)

SELECT
    @EPGUID = 'e3b7b289-cb17-4c38-8e98-858181af88a5',
    @EPURL  = 'http://fancysharepointserver:81/sites/dynamicsaxtest'

-- Global Enterprise Portal parameters
UPDATE EPGLOBALPARAMETERS
SET HOMEPAGESITEID    = @EPGUID,
    DEVELOPMENTSITEID = @EPGUID
WHERE KEY_ = 0

-- Web site registered for the company
UPDATE EPWEBSITEPARAMETERS
SET INTERNALURL = @EPURL,
    EXTERNALURL = @EPURL,
    SITEID      = @EPGUID
WHERE COMPANYID = 'DAT'
```

The URL and GUID above are just examples and will obviously differ in your environment, but you get the idea.

Now save the SQL command and make sure to include it in your routines when copying data from one environment to another.
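If you want to take the manual copy and paste out of that routine, the PowerShell lookup and the SQL update above can be combined. This is a sketch under a few assumptions: the SQL Server name and database name are hypothetical, `Invoke-Sqlcmd` must be available on the server (it ships with the SQL Server PowerShell module), and I am reading the filter in the lookup command as selecting the root web of the site collection, which is why the sketch uses `RootWeb.ID`.

```powershell
Add-PSSnapin Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue

# Hypothetical values: replace with your own site URL, SQL Server and AX database.
$epUrl     = 'http://fancysharepointserver:81/sites/dynamicsaxtest'
$sqlServer = 'MYSQLSERVER'
$axDb      = 'AX2012_TEST'

# Look up the ID of the root web for this environment's Enterprise Portal site.
$site   = Get-SPSite $epUrl
$epGuid = $site.RootWeb.ID.ToString()
$site.Dispose()

# Same two updates as the SQL above, with the values filled in.
$query = @"
UPDATE EPGLOBALPARAMETERS
SET HOMEPAGESITEID = '$epGuid', DEVELOPMENTSITEID = '$epGuid'
WHERE KEY_ = 0;

UPDATE EPWEBSITEPARAMETERS
SET INTERNALURL = '$epUrl', EXTERNALURL = '$epUrl', SITEID = '$epGuid'
WHERE COMPANYID = 'DAT';
"@

Invoke-Sqlcmd -ServerInstance $sqlServer -Database $axDb -Query $query
```

Run it on the SharePoint server after each data copy, once per target environment, with the URL of the site that belongs to that environment.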

With all of this, you should be good to go and able to have multiple SharePoint applications running Enterprise Portals for different environments, all on the same server.